All orders Built, Shipped & Supported from within the European Union


Up to 18 Cores, 3-Way SLI Graphics, Intel's Most Powerful Xeon W Processors Ever

Single 4th Gen Intel Xeon Scalable Processor, GPU Computing Pedestal Supercomputer, 4x RTX GPU Cards

NVIDIA DGX Spark Founders Edition AI Supercomputer. DGX personal AI computer, designed to build and run AI.

Single AMD 9004 Series Gen 4 Processor, GPU Computing Pedestal Supercomputer, 3x RTX GPU Cards

Up to 64 Cores, supports 4-Way SLI and CrossFireX, Up to 256GB DDR4 4400 (OC) Memory, Dual 10GbE LAN, Intel WiFi, Bluetooth, 8-Channel High Definition Audio CODEC

Dual 4th Gen Intel Xeon Scalable Processors, GPU Computing Pedestal Supercomputer, 4x RTX GPU Cards

Up to 64 Cores, 4-Way SLI Graphics, The Most Powerful Workstation Processors Ever

NVIDIA Titan RTX |

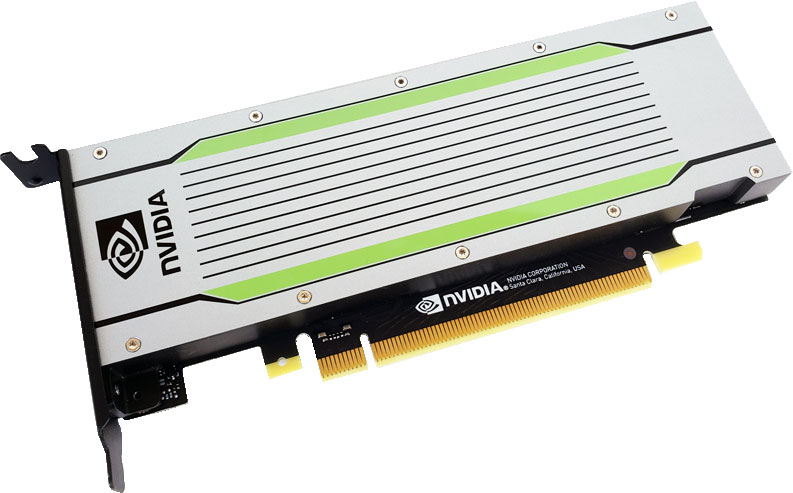

NVIDIA T4 |

NVIDIA A100 |

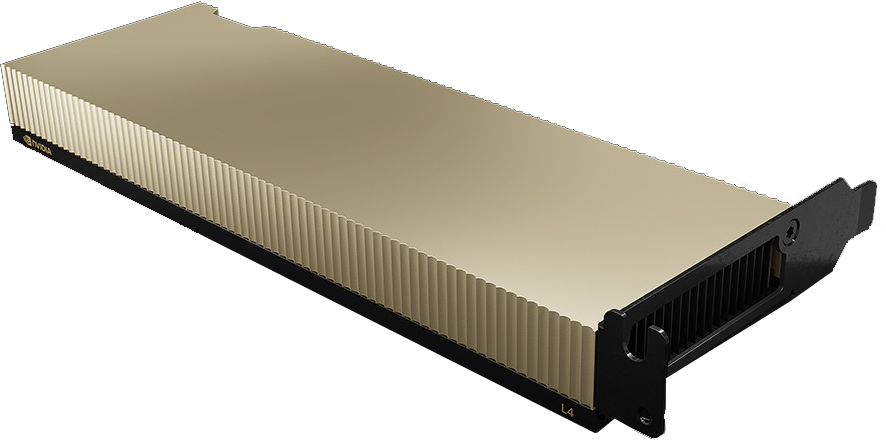

NVIDIA L4 |

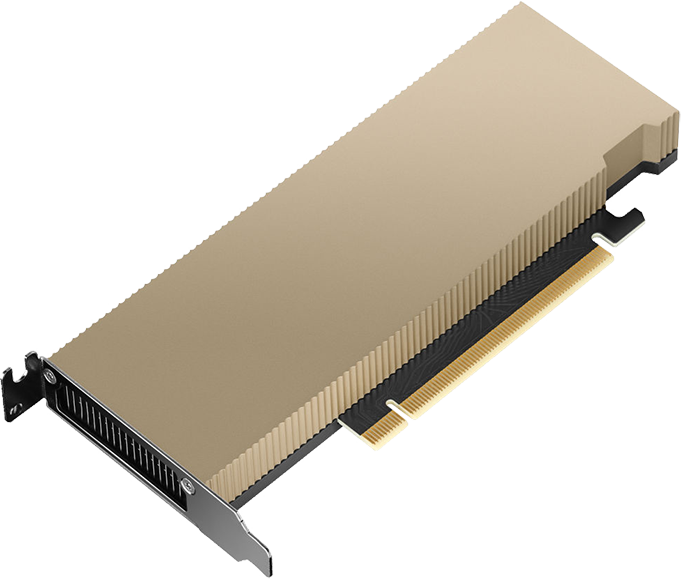

NVIDIA H100 |

|

|---|---|---|---|---|---|

| Architecture | Turing | Turing | Ampere | Ada Lovelace | Hopper |

| SMs | 72 | 72 | 108 | 60 | 114 |

| CUDA Cores | 4,608 | 2,560 | 6,912 | 7,424 | 18,432 |

| Tensor Cores | 576 | 320 | 432 | 240 | 640 |

| Frequency | 1,350 MHz | 1,590 MHz | 1,095 Mhz | 795 Mhz | 1,590 MHz |

| TFLOPs (double) | 8.1 | 65 | 9.7 | 489.6 (1:64) | 25.61 (1:2) |

| TFLOPs (single) | 16.3 | 8.1 | 19.5 | 31.33 (1:1) | 10.6 |

| TFLOPs (half/Tensor) | 130 | 65.13 TFLOPS(8:1) | 624 | 31.33 (1:1) | 204.9 (4:1) |

| Cache | 6 MB | 4 MB | 40 MB | 48 MB | 50 MB |

| Max. Memory | 24 GB | 16 GB | 40 GB | 24 GB | 80 GB |

| Memory B/W | 672 GB/s | 350 GB/s | 1,555 GB/s | 300 GB/s | 2,000 Gb/s |
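As a rough cross-check of the single-precision figures in the table above, theoretical peak throughput is commonly estimated as CUDA cores × 2 FLOPs per clock (one fused multiply-add) × clock speed. The sketch below applies that rule of thumb; the function name `peak_fp32_tflops` is illustrative, and the assumption of one FMA per core per clock is ours rather than something the table states.

```python
def peak_fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Estimate theoretical peak FP32 TFLOPS.

    Assumes each CUDA core retires one fused multiply-add
    (counted as 2 floating-point operations) per clock cycle.
    """
    flops_per_second = cuda_cores * 2 * clock_mhz * 1e6
    return flops_per_second / 1e12


# Core counts and frequencies as listed in the table above.
cards = [
    ("NVIDIA Titan RTX", 4608, 1350),
    ("NVIDIA T4", 2560, 1590),
    ("NVIDIA A100", 6912, 1095),
]

for name, cores, mhz in cards:
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.2f} TFLOPS (estimated)")
```

With the listed clocks, the T4 estimate lands close to the 8.1 TFLOPS quoted, while the Titan RTX and A100 estimates come out lower than the quoted figures. One plausible explanation (an assumption, not confirmed by the table) is that some frequencies are base clocks while the TFLOPS figures are quoted at boost clocks.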

The fastest and highest performance PC graphics card created, the NVIDIA Titan RTX is powered by Turing architecture and delivers 130 Tensor TFLOPs of performance, 576 tensor cores and 24GB of super-fast GDDR6 memory to your PC. The Titan RTX powers machine learning, AI and creative workflows.

It is hard to find a better option for dealing with computationally intense workloads than the Titan RTX. Created to dominate in even the most demanding of situations, it brings ultimate speed to your data centre. The Titan RTX is built on NVIDIA's Turing GPU Architecture. It includes the very latest Tensor Core and RT Core technology and is also supported by NVIDIA drivers and SDKs. This enables you to work faster and leads to improved results.

AI models can be trained significantly faster with 576 NVIDIA Turing mixed-precision Tensor Cores providing 130 TFLOPS of AI performance. This card works well with all the best-known deep learning frameworks, is compatible with NVIDIA GPU Cloud and is supported by NVIDIA's CUDA-X AI SDK.

It accelerates applications significantly, with 4,608 NVIDIA Turing CUDA cores speeding up end-to-end data science workflows. With 24 GB of GDDR6 memory you can process gargantuan sets of data.

The Titan RTX reaches a level of performance far beyond its predecessors. Built with multi-precision Turing Tensor Cores, Titan RTX provides breakthrough performance from FP32, FP16, INT8 and INT4, making quicker training and inferencing of neural networks possible.

NVIDIA Tesla T4 GPUs power the planet's most reliable mainstream servers. They fit easily into standard data centre infrastructures. Packaged in a low-profile, 70-watt design, T4 is powered by NVIDIA Turing Tensor Cores, supplying innovative multi-precision performance to accelerate a vast range of modern applications.

It is almost certain that we are heading towards a future where each of your customer interactions, every one of your products and services will be influenced and enhanced by Artificial Intelligence. AI is going to become the driving force behind all future business, and whoever adapts first to this change is going to hold the key to business success in the long term.

The NVIDIA T4 GPU allows you to cost-effectively scale artificial intelligence-based services. It accelerates diverse cloud workloads, including high-performance computing, data analytics, deep learning training and inference, graphics and machine learning. T4 features multi-precision Turing Tensor Cores and new RT Cores. It is based on NVIDIA Turing architecture and comes in a very energy efficient small PCIe form factor. T4 delivers ground-breaking performance at scale.

T4 harnesses revolutionary Turing Tensor Core technology featuring multi-precision computing to deal with diverse workloads. The T4 is capable of reaching blazing fast speeds.

User engagement will be a vital component of successful AI implementation, with responsiveness being one of the main keys. This will be especially apparent in services such as visual search, conversational AI and recommender systems. As models continue to advance and increase in complexity, ever-growing compute capability will be required. T4 provides massively improved throughput, allowing more requests to be served in real time.

The medium of online video is quite possibly the number one way of delivering information in the modern age. As we move forward into the future, the volume of online videos will only continue to grow exponentially. Simultaneously, the demand for answers to how to efficiently search and gain insights from video continues to grow.

T4 provides ground-breaking performance for AI video applications, featuring dedicated hardware transcoding engines which deliver 2x the decoding performance possible with previous-generation GPUs. T4 can decode nearly 40 full high definition video streams, making it simple to integrate scalable deep learning into video pipelines to provide inventive, smart video services.

The NVIDIA A100 GPU provides unmatched acceleration at every scale for data analytics, AI and high-performance computing to attack the very toughest computing challenges. An A100 can efficiently and effectively scale to thousands of GPUs. With NVIDIA Multi-Instance GPU (MIG) technology, it can be partitioned into 7 GPU instances, accelerating workloads of every size.

The NVIDIA A100 introduces double-precision Tensor Cores, delivering the biggest milestone since double-precision computing was introduced in GPUs. The speed boost this offers can be immense, with a 10-hour double-precision simulation running on NVIDIA V100 Tensor Core GPUs being cut down to only 4 hours when run on A100s. High-performance applications are also able to leverage TF32 precision in the A100's Tensor Cores to reach up to 10x higher throughput for single-precision dense matrix multiply operations.
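To give a feel for what TF32 precision means numerically, the sketch below emulates it in plain Python. TF32 keeps the 8-bit exponent of FP32 but only 10 of its 23 mantissa bits, so the emulation simply clears the 13 lowest mantissa bits of a 32-bit float. The helper name `to_tf32` is illustrative, and truncation is a simplifying assumption: real hardware may round rather than truncate.

```python
import struct


def to_tf32(x: float) -> float:
    """Approximate TF32 by truncating an FP32 value to 10 mantissa bits.

    Assumption: truncation stands in for the hardware's rounding behaviour.
    """
    # Reinterpret the FP32 bit pattern as an unsigned 32-bit integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Keep sign (1 bit), exponent (8 bits) and the top 10 mantissa bits;
    # clear the remaining 13 low mantissa bits.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


for value in (1.0, 3.14159265, 1.0 + 2.0 ** -11):
    print(f"{value!r} -> {to_tf32(value)!r}")
```

The range of representable magnitudes is unchanged from FP32, while fine detail below roughly three decimal digits is dropped, which is the trade-off that lets Tensor Cores process such values faster.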

In modern data centres it is vital to be able to visualise, analyse and transform huge datasets into insights. However, scale-out solutions quite often end up being bogged down as datasets end up spread across many servers. Servers powered by the A100 deliver the necessary compute power, as well as 1.6TB/sec of memory bandwidth and huge scalability.

The NVIDIA A100 with MIG maximises GPU-accelerated infrastructure utilisation in a way never seen before. With MIG, an A100 GPU can be partitioned into up to 7 independent instances. This can give a multitude of users access to GPU acceleration for their applications and projects.
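The partitioning idea can be sketched as a simple capacity check. The model below treats a 40 GB A100 as 7 compute slices and 40 GB of memory, and tests whether a requested mix of instances fits. The profile names and sizes are assumptions taken from NVIDIA's published MIG profiles for the 40 GB A100, `fits_on_a100` is a hypothetical helper, and real MIG placement rules are stricter than this sum-based check.

```python
# Assumed MIG profiles for a 40 GB A100: name -> (compute slices, memory in GB).
PROFILES = {
    "1g.5gb": (1, 5),
    "2g.10gb": (2, 10),
    "3g.20gb": (3, 20),
    "4g.20gb": (4, 20),
    "7g.40gb": (7, 40),
}

TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_GB = 40


def fits_on_a100(requested: list[str]) -> bool:
    """Return True if the requested instances fit within one GPU's budget.

    Simplification: only total compute slices and total memory are checked;
    actual hardware also constrains where each instance may be placed.
    """
    slices = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return slices <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB


print(fits_on_a100(["1g.5gb"] * 7))          # seven small instances
print(fits_on_a100(["4g.20gb", "3g.20gb"]))  # one larger, one medium
print(fits_on_a100(["4g.20gb", "4g.20gb"]))  # exceeds 7 compute slices
```

Seven of the smallest instances correspond to the "up to 7 independent instances" case described above, while larger instances trade instance count for more compute and memory per user.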

The NVIDIA L4 Tensor Core GPU, built on the NVIDIA Ada Lovelace architecture, offers versatile and power-efficient acceleration across a wide range of applications, including video processing, AI, visual computing, graphics, virtualisation, and more. Available in a compact low-profile design, the L4 provides a cost-effective and energy-efficient solution, ensuring high throughput and minimal latency in servers spanning from edge devices to data centers and the cloud.

The NVIDIA L4 is an integral part of the NVIDIA data center platform. Engineered to support a wide range of applications such as AI, video processing, virtual workstations, graphics rendering, simulations, data science, and data analytics, this platform enhances the performance of more than 3,000 applications. It is accessible across various environments, spanning from data centers to edge computing to the cloud, offering substantial performance improvements and energy-efficient capabilities.

As AI and video technologies become more widespread, there's a growing need for efficient and affordable computing. NVIDIA L4 Tensor Core GPUs offer a substantial boost in AI video performance, up to 120 times better, resulting in a remarkable 99 percent improvement in energy efficiency and lower overall ownership costs when compared to traditional CPU-based systems. This enables businesses to reduce their server space requirements and significantly decrease their environmental impact, all while expanding their data centers to serve more users. Switching from CPUs to NVIDIA L4 GPUs in a 2-megawatt data center can save enough energy to power over 2,000 homes for a year or offset the carbon emissions equivalent to planting 172,000 trees over a decade.

As AI becomes commonplace in enterprises, organizations need comprehensive AI-ready infrastructure to prepare for the future. NVIDIA AI Enterprise is a complete cloud-native package of AI and data analytics software, designed to empower all organizations in excelling at AI. It's certified for deployment across various environments, including enterprise data centers and the cloud, and includes global enterprise support to ensure successful AI projects.

NVIDIA AI Enterprise is optimised to streamline AI development and deployment. It comes with tested open-source containers and frameworks, certified to work on standard data center hardware and popular NVIDIA-Certified Systems™ equipped with NVIDIA L4 Tensor Core GPUs. Plus, it includes support, providing organizations with the benefits of open source transparency and the reliability of global NVIDIA Enterprise Support, offering expertise for both AI practitioners and IT administrators.

NVIDIA AI Enterprise software is an extra license for NVIDIA L4 Tensor Core GPUs, making high-performance AI available to almost any organization for training, inference, and data science tasks. When combined with NVIDIA L4, it simplifies creating an AI-ready platform, speeds up AI development and deployment, and provides the performance, security, and scalability needed to gain insights quickly and realize business benefits sooner.

Experience remarkable performance, scalability, and security for all tasks using the NVIDIA H100 Tensor Core GPU. The NVIDIA NVLink Switch System allows for connecting up to 256 H100 GPUs to boost exascale workloads. This GPU features a dedicated Transformer Engine to handle trillion-parameter language models. Thanks to these technological advancements, the H100 can accelerate large language models (LLMs) by an impressive 30X compared to the previous generation, establishing it as the leader in conversational AI.

NVIDIA H100 GPUs for regular servers include a five-year software subscription that encompasses enterprise support for the NVIDIA AI Enterprise software suite. This simplifies the process of adopting AI while ensuring top performance. It grants organizations access to essential AI frameworks and tools to create H100-accelerated AI applications, such as chatbots, recommendation engines, and vision AI. Take advantage of the NVIDIA AI Enterprise software subscription and its associated support for the NVIDIA H100.

The NVIDIA H100 GPUs feature fourth-generation Tensor Cores and the Transformer Engine with FP8 precision, solidifying NVIDIA's AI leadership by achieving up to 4X faster training and an impressive 30X speed boost for inference with large language models. In the realm of high-performance computing (HPC), the H100 triples the floating-point operations per second (FLOPS) for FP64 and introduces dynamic programming (DPX) instructions, resulting in a remarkable 7X performance increase. Equipped with the second-generation Multi-Instance GPU (MIG), built-in NVIDIA confidential computing, and the NVIDIA NVLink Switch System, the H100 provides secure acceleration for all workloads across data centers, ranging from enterprise to exascale.

The NVIDIA Pascal architecture enables the Tesla P100 to deliver superior performance for HPC and hyperscale workloads. With more than 21 teraflops of FP16 performance, Pascal is optimised to drive exciting new possibilities in deep learning applications. Pascal also delivers over 5 and 10 teraflops of double and single precision performance for HPC workloads.

The NVIDIA H100 is a crucial component of the NVIDIA data center platform, designed to enhance AI, HPC, and data analytics. This platform accelerates more than 3,000 applications and is accessible across various locations, from data centers to edge computing, providing substantial performance improvements and cost-saving possibilities.

Broadberry GPU Workstations harness the processing power of NVIDIA Tesla graphics processing units for millions of applications such as image and video processing, computational biology and chemistry, fluid dynamics simulation, CT image reconstruction, seismic analysis, ray tracing, and much more.

As computing evolves and processing moves from the CPU to co-processing between the CPU and GPUs, NVIDIA invented the CUDA parallel computing architecture to harness the performance benefits.

Speak to Broadberry GPU computing experts to find out more.

Accelerating scientific discovery, visualising big data for insights, and providing smart services to consumers are everyday challenges for researchers and engineers. Solving these challenges takes increasingly complex and precise simulations, the processing of tremendous amounts of data, or training sophisticated deep learning networks. These workloads also require accelerating data centres to meet the growing demand for exponential computing.

NVIDIA Tesla is the world's leading platform for accelerated data centres, deployed by some of the world's largest supercomputing centres and enterprises. It combines GPU accelerators, accelerated computing systems, interconnect technologies, development tools and applications to enable faster scientific discoveries and big data insights.

At the heart of the NVIDIA Tesla platform are the massively parallel GPU accelerators that provide dramatically higher throughput for compute-intensive workloads, without increasing the power budget and physical footprint of data centres.

Our Rigorous Testing

Before leaving our UK workshop, all Broadberry server and storage solutions undergo a rigorous 48-hour testing procedure. This, along with high-quality, industry-leading components, ensures that all of our server and storage solutions meet the strictest quality guidelines demanded of us.

Unequalled Flexibility

Our main objective is to offer great-value, high-quality server and storage solutions. We understand that every company has different requirements, and as such we are able to offer unequalled flexibility in designing custom server and storage solutions to meet our clients' needs.

We have established ourselves as one of the biggest storage providers in the UK, and since 1989 have supplied our server and storage solutions to the world's biggest brands. Our customers include: